When to use threads:


Reference:

http://www.yoda.arachsys.com/csharp/threads/whentouse.shtml

https://www.ijarcsse.com/docs/papers/Volume_6/6_June2016/V6I6-0212.pdf

http://stackoverflow.com/questions/2857184/when-should-i-use-threads

http://stackoverflow.com/questions/617787/why-should-i-use-a-thread-vs-using-a-process

http://www.drdobbs.com/parallel/the-pillars-of-concurrency/200001985

https://techiecode.wordpress.com/2013/05/11/differences-between-dual-core-and-single-core-processor/

- You should use multithreading when you want to perform heavy operations without blocking the main flow of the program.

For example, a UI can run heavy processing on a background thread while the interface itself stays active.

Threads work best when their tasks are independent of one another, because then no synchronization between threads is needed and there is no overhead for it.

- from http://www.yoda.arachsys.com/csharp/threads/whentouse.shtml (best)

I've written a lot now about how to use threads, but not when to use threads - when it's appropriate to and when it's better to keep everything in the same thread. This is partly because after a while it becomes fairly natural to work out what belongs where, and partly because it's quite tricky to actually describe.

There are a few times when there's absolutely no point in using multiple threads. For instance, if your application is bound by a single resource (e.g. the disk, or the CPU) and all the tasks you would use multiple threads for will be trying to use that same resource, you'll just be adding contention. For instance, suppose you had an application which collected all the names of files on your disk. Splitting that job into multiple threads isn't likely to help - if anything, it'll make it worse, because it would be asking the file system for lots of different directories all at the same time, which could make the head seek all over the place instead of progressing steadily. Similarly, if you have a workflow where each stage relies entirely on the results of the previous stage, you can't use threads effectively. For instance, if you have a program which loads an image, rotates it, then scales it, then turns it into black and white, then saves it again, each stage really needs the previous one to be finished before it can do anything.

Suppose, however, you wanted to read a bunch of files and then process their contents (e.g. calculating the cryptographic hash using an algorithm which takes a lot of processor power) then that might very well benefit from threading - either by having several threads doing both, or one thread dedicated to disk IO and another dedicated to hash calculation. The latter would probably be better, but would probably involve more work as the threads would need to be passing data to each other rather than just doing their own thing. Even though here there is still a dependency between the data being read from disk and the crypto processing, you don't have to read all the data from the disk before you can start processing it.
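
The "one thread for disk IO, one thread for hashing" arrangement can be sketched roughly as follows. This is only an illustration in Python rather than the C# the article is about, and the names `hash_chunks`, `reader`, `hasher` and `DONE` are made up for the example. The reader is handed in-memory chunks here; a real one would read them from files.

```python
import hashlib
import queue
import threading


def hash_chunks(chunks):
    """Hash an iterable of byte chunks with one reader and one hasher thread."""
    handoff = queue.Queue(maxsize=4)  # bounded, so the reader cannot run far ahead
    digest = hashlib.sha256()
    DONE = object()                   # sentinel marking the end of the input

    def reader():
        # Stands in for the disk IO thread (assumption: chunks come from memory).
        for chunk in chunks:
            handoff.put(chunk)
        handoff.put(DONE)

    def hasher():
        # Processor-heavy stage: starts as soon as the first chunk arrives,
        # without waiting for all of the data to be read.
        while True:
            item = handoff.get()
            if item is DONE:
                break
            digest.update(item)

    threads = [threading.Thread(target=reader), threading.Thread(target=hasher)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return digest.hexdigest()
```

The queue is the "passing data to each other" part that makes this design more work than having each thread simply read and hash its own files.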

Similarly, in applications other than Windows Forms applications, it probably doesn't matter if you need to do something which will take a little while - a second or so, for instance. If you're writing a batch program, it doesn't matter in the slightest whether your code is always doing something different or whether some operations take longer than others. That's not to say that batch processing should always be single-threaded, but you don't need to worry about having a "main" thread which must be ready to react to events.

If you're writing an ASP.NET page, then unless you think the operation will take so long that you're willing to start another thread and send a page back to the user which tells them to wait and then refreshes itself (using an HTTP meta refresh tag, for instance) periodically to check whether the "real" page has finished, it's usually not worth changing to a different thread. It won't make the page come up any faster, as you'll just have to tell the "main" thread to wait for the other one to finish, and it'll be considerably more complicated to implement. Of course, the part about not being any faster isn't true if you genuinely can do two independent things at once - querying two different databases, for instance. In that case, threading can occasionally be very useful even in ASP.NET scenarios, although you should consider using the thread pool for such tasks to avoid creating lots of threads which each only run for a short time. Don't forget that there may well be other requests which want to use the same resources, and you won't be doing less work in total by spreading it out over many threads. In short, while creating extra threads (or explicitly using the thread pool) is sometimes useful in ASP.NET, it's usually a last resort rather than a matter of course.
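
The "two independent things at once" case might look something like the sketch below. It is written in Python, not ASP.NET, and `query_orders`, `query_profile` and `load_page_data` are hypothetical stand-ins that sleep instead of talking to real databases. The point is only that both waits overlap when the work is handed to a pool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def query_orders():
    # Hypothetical stand-in for a query against one database.
    time.sleep(0.2)
    return ["order-1", "order-2"]


def query_profile():
    # Hypothetical stand-in for a query against a second, unrelated database.
    time.sleep(0.2)
    return {"name": "example"}


def load_page_data(pool):
    # Submit both queries first so that their waiting time overlaps,
    # then collect the two results.
    orders_future = pool.submit(query_orders)
    profile_future = pool.submit(query_profile)
    return orders_future.result(), profile_future.result()


with ThreadPoolExecutor(max_workers=2) as pool:
    orders, profile = load_page_data(pool)
```

The request still has to wait for the slower of the two queries, so this only pays off when the operations really are independent.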

In Windows Forms applications, you really should put anything which takes any significant amount of time (even reading a short file) into a different thread, unless the code is really only for your own use and you don't mind an unresponsive UI. This is important, as while the UI thread is doing something else (reading a file, doing a heavy calculation, etc) it can't be reacting to events like the user trying to close it, or a previously hidden area now becoming exposed. The UI can easily become very unresponsive, which gives a horrible user experience. Here, threading isn't used to get the job done quickly - it's used to get the job done while keeping the user satisfied with responsiveness. You might be surprised just how quickly a user can notice an app becoming unresponsive. Even if the user can't actually do anything but close the application or move the window around while they wait, it gives a much more professional feel to a program if you don't end up with a big white box when you pass another window over it.
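
As a rough, framework-free illustration of that idea, the sketch below simulates a UI loop in Python. It is not Windows Forms code: `slow_job` and `run_ui_loop` are invented names, and "handling an event" is reduced to incrementing a counter. The worker posts its result to a queue, and the loop keeps turning while it waits.

```python
import queue
import threading
import time


def slow_job():
    # Stands in for reading a file or doing a heavy calculation.
    time.sleep(0.3)
    return "job finished"


def run_ui_loop():
    results = queue.Queue()
    worker = threading.Thread(target=lambda: results.put(slow_job()))
    worker.start()

    events_handled = 0
    while True:
        # The simulated UI thread keeps handling events (here: counting ticks)...
        events_handled += 1
        try:
            # ...and only briefly checks whether the worker has posted a result.
            outcome = results.get(timeout=0.01)
            break
        except queue.Empty:
            continue
    worker.join()
    return outcome, events_handled


outcome, events_handled = run_ui_loop()
```

Had `slow_job()` been called directly inside the loop, no events would have been handled until it returned.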

The choice between using a "new" thread and using one from the thread pool is a contentious one - I tend to like using new threads for most purposes, and others recommend almost always using thread pool threads. My concern about using the thread pool is that there are only a certain number of threads in it, and it's used in various ways by the framework itself - and it's not always obvious that it's doing so. If you accidentally end up with all the threads in the thread pool waiting for other work items which are scheduled to run in the thread pool, you'll have a very difficult deadlock to debug.
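
The deadlock described here can be reproduced in miniature. The sketch below uses Python's `ThreadPoolExecutor` with a deliberately tiny pool of one thread, rather than the .NET thread pool, and adds a timeout so that the example terminates instead of hanging. The function names are made up for the illustration.

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError


def demo_pool_starvation():
    pool = ThreadPoolExecutor(max_workers=1)  # deliberately tiny pool

    def inner():
        return "inner done"

    def outer():
        # The only pool thread is busy running outer(), so inner() cannot
        # start while outer() is waiting for it.
        future = pool.submit(inner)
        try:
            return future.result(timeout=0.5)
        except TimeoutError:
            # Without the timeout this wait would never end.
            return "starved"

    outcome = pool.submit(outer).result()
    pool.shutdown()  # inner() finally runs once outer() has given up
    return outcome
```

With a larger pool the same thing happens as soon as every thread is occupied by a task that waits on further work queued in the same pool.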

Using new threads, however, is relatively costly if they're only going to run for a short while - creating new threads isn't terribly cheap at the operating system level, whereas the thread pool will of course re-use threads to avoid repeating this cost. One happy medium is to use your own thread pool which is separate from the system one but which will still re-use threads. I have a fairly simple implementation in my Miscellaneous Utility Library which you can tweak if it doesn't quite meet your requirements. It probably won't perform quite as well as the system thread pool which has been finely tuned - but you have a lot more control over what goes in it, how many threads it creates, etc.
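
To give a feel for what a private pool involves, here is a minimal sketch in Python. It is not the implementation from the Miscellaneous Utility Library mentioned above; `SmallPool` and its methods are hypothetical names, and the design (a fixed number of threads pulling tasks from a queue) is just one simple option.

```python
import queue
import threading
import traceback


class SmallPool:
    """A tiny fixed-size pool that re-uses its own threads."""

    def __init__(self, size):
        self._tasks = queue.Queue()
        self._threads = [threading.Thread(target=self._work) for _ in range(size)]
        for t in self._threads:
            t.start()

    def _work(self):
        while True:
            task = self._tasks.get()
            if task is None:          # sentinel: this worker should stop
                break
            func, args = task
            try:
                func(*args)
            except Exception:
                traceback.print_exc()  # keep the worker alive if a task fails
            finally:
                self._tasks.task_done()

    def submit(self, func, *args):
        self._tasks.put((func, args))

    def close(self):
        self._tasks.join()            # wait until all queued tasks have run
        for _ in self._threads:
            self._tasks.put(None)     # one stop sentinel per worker
        for t in self._threads:
            t.join()
```

A short usage example, recording which thread ran each task:

```python
seen = []
pool = SmallPool(2)
for i in range(10):
    pool.submit(lambda i=i: seen.append((i, threading.current_thread().name)))
pool.close()
```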

Using multiple threads is almost always going to introduce complexity to your application, so the potential benefits should be carefully considered and weighed up before you start writing code. Work out the "boundaries" between threads in detail - what thread needs access to what data when, etc. This can make an enormous difference when it comes to the implementation. After making the decision to use threads and designing the threading scenarios carefully, keep taking care while writing the code - it's very easy to slip up, unfortunately, even with all the tools the .NET framework provides. The results of this work should be an elegant application which performs well and remains responsive whatever it's doing - something to feel justly proud of. Good luck!

- from https://www.cs.mtu.edu/~shene/NSF-3/e-Book/FUNDAMENTALS/threads.html (best)

Some years ago I saw a letter to the editor about the need for a multitasking system. The writer said, "I don't care about multitasking because I can only do one thing at a time." Really? Does this person only do one thing at a time? The letter continues: "I finish my Word document, print it, fire up my modem to connect to the Internet, read my e-mail, and go back to work on another document." Does this person use his time efficiently? Many of us might suggest that he could fire up his modem while the printer is printing, or work on another document while the previous one is being printed. Good suggestions, indeed. In fact, we multitask all the time in daily life. For example, you might watch your favorite TV program or movie and enjoy your popcorn. Or, while you are printing a long document, you might read a newspaper or company news. There are many such examples in which we do two or more tasks simultaneously. This is a form of multitasking! In fact, multitasking is even more common in industry. While each worker on an assembly line seems to work sequentially, there can be multiple production lines, all performing the same task concurrently. Moreover, the engine assembly lines produce engines while other lines produce other components. Of course, the car assembly lines run concurrently with all of the other lines. The final product is the result of these concurrently running assembly and production lines. Without this type of "parallelism", Detroit would not have been able to produce sufficient airplanes and tanks for WW II or enough automobiles to meet our demand.

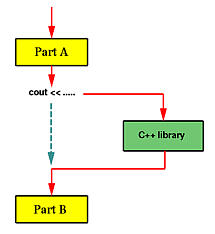

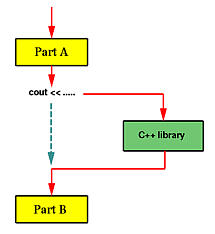

Unfortunately, before you learn how to split your program into multiple execution threads, all the programs you have written contain a single execution thread. The following diagram shows an example. Suppose we have a program of two parts, Part A and Part B. After Part A finishes its computation, we use some cout statements to print out a large amount of output. As we all know, when a program prints, control is transferred to a function in C++'s library and the execution of that program is essentially suspended, shown as a dashed line in the diagram, until the printing completes. Once the printing is done, the execution of the program resumes and starts the computation of Part B. Is there anything wrong with this? No, we are used to it and we have been trained to program this way ever since CS101. However, is this way of programming good enough in terms of efficiency? It depends; but, in many situations, it is not good enough.

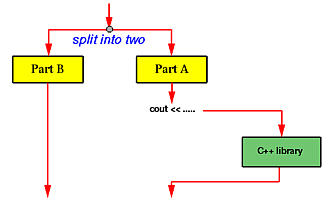

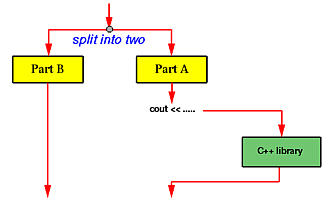

If Part B must use some data generated by Part A, then Part B perhaps has to be executed after the output of cout completes. On the other hand, in many situations, Part A and Part B are independent of each other, or one may slightly rewrite both parts so that they do not depend on each other. In this case, Part B does not have to wait until cout completes. In fact, this is the key point! Therefore, before the execution of Part A, the program can be split into two execution threads, one for Part A and the other for Part B. See the diagram below. In this way, both execution threads share the CPU and all resources allocated to the program. Moreover, while Part A is performing the output, which causes Part A to wait, Part B can take the CPU and execute. As a result, this version is more efficient than the previous one. Moreover, in a system with more than one CPU, it is possible that the system will run both Part A and Part B at the same time, one on each CPU.
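
The split into a Part A thread and a Part B thread could be sketched as below. The text talks about C++ and cout; this sketch uses Python instead, writes to an in-memory buffer rather than the screen, and uses a short sleep as an assumed stand-in for the time Part A spends waiting on the output device. The names `part_a` and `part_b` simply mirror the two parts in the text.

```python
import io
import threading
import time


def part_a(out):
    # Part A: finish a computation, then print a large amount of output.
    total = sum(range(1000))
    for i in range(100):
        out.write(f"line {i}: {total}\n")
    time.sleep(0.2)  # assumption: stands in for waiting on a slow output device


def part_b(results):
    # Part B: independent work that needs nothing produced by Part A.
    results["squares"] = sum(n * n for n in range(1000))


out = io.StringIO()  # stands in for cout / the screen
results = {}
threads = [
    threading.Thread(target=part_a, args=(out,)),
    threading.Thread(target=part_b, args=(results,)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

While the Part A thread is waiting, the Part B thread is free to run, so the program as a whole finishes sooner than if Part B only started after the output had completed.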

In real programming practice, a program may use an execution thread for handling keyboard/mouse input, a second execution thread for handling screen updates, and a number of other threads for carrying out various computation tasks.

